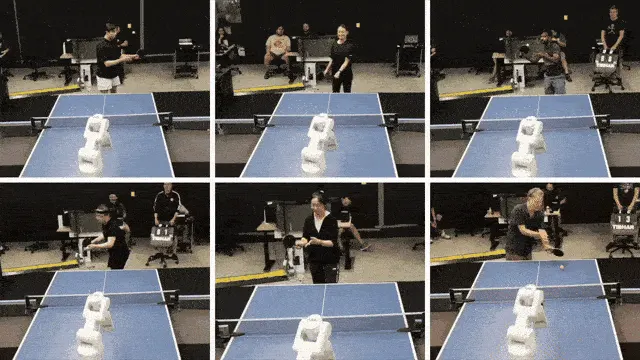

– Google DeepMind, the AI lab known for mastering Go and predicting protein structures, has developed a robotic system that can beat human players at table tennis.

– The system uses a customized DeepMind neural network, trained on both real and simulated table tennis data, to control a robotic arm. It learns strategies and skills such as topspin serves.

– In experiments, the robot arm beat every beginner it played and about 55% of intermediate players. That performance puts it roughly at the level of a solid amateur.

– However, it struggled against advanced players and with certain shots, such as high balls and backhands. Its abilities remain limited compared with those of top human players.

– Human opponents described playing against the robot as “fun” and “engaging,” even when they lost. The approach could help robots respond quickly in dynamic physical environments.

– The research shows continued progress toward AI that can skillfully interact with and manipulate real-world objects, as a robotic arm must do to play a sport. Still, humans significantly outperform current AI in complex physical domains like table tennis.

Source: Live Science